Artificial Intelligence in Recent Years

Progress and Distractions

The world has been captivated by the promise of AI since Alan Turing wrote his influential paper on the matter, Computing Machinery and Intelligence, in 1950 [1]. Great advancements have been made in the field since then, from the novel gimmicks of the 1960s, such as ELIZA [2], to the more genuine use cases of the twenty-first century. It is clear, from some of the prominent examples explored in this paper, that the research and public consciousness of AI has moved beyond the attempts to mimic human behaviour that Turing describes in his writings on the Imitation Game and that MIT implemented in the ELIZA program. AI has expanded into areas that are beyond the ability of the human being, and can mimic natural human abilities in situations where a human cannot be of service (e.g. unlocking someone’s personal phone using facial recognition [3]). The last five years have seen considerable advances in AI research and commercial implementation, which should not be understated. Such advancements, however, have been accompanied by an almost equal amount of exaggeration of the ability and achievements of this area of Computer Science. A prime example is the claim that a chat-bot named Eugene beat the Turing test in 2014 [4]. Even disregarding the fact that Turing never stated that the test is considered “beaten” if a program is judged to be human after five minutes of conversation, the conversations between the judges and Eugene seem to be very easily distinguishable from standard human discourse within only a few exchanges [5].

Significant AI Applications

IBM Watson

The IBM Watson system came to prominence in 2011 when IBM entered the computer into the popular US game show Jeopardy!. Watson beat the best contestants in the show’s history by a wide margin [6], demonstrating the technical achievement of the system’s natural language and search technologies.

IBM Watson uses natural language processing, not just to understand the question that it is being asked, but also the data that it is being tasked with understanding. These natural language processes are combined with machine learning to ensure that it is reading its input data in the correct way [7].

After Watson’s success on Jeopardy!, IBM has in more recent years moved on to using the system for more practical purposes, applying its power to areas such as health, cooking and security, amongst other industries [8]. In healthcare, for example, Watson is being used to take information from a tumor biopsy and search the medical literature for the research most relevant to the patient’s needs. This collated research can increase the efficiency of the treating doctor, allowing them to improve the quality of their care.

I would argue that IBM Watson is one of the greatest breakthroughs in the field of Artificial Intelligence since the area was first conceived. Where other systems may take advantage of a few AI algorithms, Watson has been able to utilise natural language, machine learning and other important fields of work together in a system that has great potential to be commercially viable.

AlphaGo

AlphaGo is a program developed by Google to play Go, the ancient board game from China. Go was considered very difficult for computers to play due to the incredibly high number of possible moves available to the player at any point during the game [9].

Unlike previous game-playing computers, which often employed pre-programmed rules to decide how best to proceed, AlphaGo uses neural networks to help decide its next move [10]. It combines Convolutional Neural Networks with a traditional game-playing algorithm, Monte Carlo Tree Search. The system uses two neural networks: one for evaluating how well each player is currently doing and one for deciding which move to play next. By combining neural networks with Monte Carlo Tree Search, AlphaGo is able to reduce the amount of the search tree that it needs to explore, since the neural network has learnt which parts of the tree do not hold a viable next move. To improve the quality of the program’s play, the researchers started by training it on moves from games played by skilled human players, giving it a general understanding of how a skilled player would operate. They then let the computer play against itself; after finishing a game, the computer would adapt itself to act more like the winning version of itself.
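The way a move-suggesting network can narrow a tree search can be sketched in a few lines. The following is a minimal illustration, not AlphaGo’s actual code: it assumes a simplified PUCT-style selection rule, and the names `puct_select` and `allocate_visits`, the constant `c_puct`, the `+ 1` inside the square root and the fixed `values` list (standing in for an evaluation network) are all hypothetical choices made for this example.

```python
import math


def puct_select(priors, visits, mean_values, c_puct=1.0):
    """Return the index of the move with the best value-plus-exploration score.

    priors      -- move probabilities from a (hypothetical) move-suggesting network
    visits      -- how many simulations have already gone through each move
    mean_values -- average evaluation seen so far for each move
    """
    total_visits = sum(visits)
    best_move, best_score = 0, -math.inf
    for move, prior in enumerate(priors):
        # Exploration bonus: large for moves the network likes, and it
        # shrinks as a move accumulates visits. The "+ 1" under the root is
        # an assumption so that the very first selection is driven by priors.
        exploration = c_puct * prior * math.sqrt(total_visits + 1) / (1 + visits[move])
        score = mean_values[move] + exploration
        if score > best_score:
            best_move, best_score = move, score
    return best_move


def allocate_visits(priors, values, simulations, c_puct=1.0):
    """Run a fixed number of simulations and count visits per move.

    `values` are fixed stand-ins for what an evaluation network would return
    for the position reached by each move.
    """
    visits = [0] * len(priors)
    value_sums = [0.0] * len(priors)
    for _ in range(simulations):
        means = [value_sums[i] / visits[i] if visits[i] else 0.0
                 for i in range(len(priors))]
        move = puct_select(priors, visits, means, c_puct)
        visits[move] += 1
        value_sums[move] += values[move]
    return visits


if __name__ == "__main__":
    # Three candidate moves with equal evaluations but very different priors.
    print(allocate_visits([0.6, 0.35, 0.05], [0.5, 0.5, 0.5], simulations=200))
```

Even though all three moves are given the same evaluation here, the move with the smallest prior receives the fewest simulations, which is the sense in which the network lets the search ignore most of the tree.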

The program came to prominence in the world media after it managed to beat Lee Se-dol, a Go player ranked 9 dan, the highest professional level [11]. This was considered to be an incredible achievement, similar to Deep Blue’s victory over Garry Kasparov almost two decades earlier [12].

Although AlphaGo is definitely an impressive feat of engineering, I would argue that the significance of this program has been largely overstated. A claim often repeated in press coverage of the event is that a program of this kind was believed to be almost a decade away. This claim seems to originate from Google’s publication on AlphaGo in the journal Nature. Of the sources that the paper uses for this claim, one never mentions a time frame for when a program of this kind would be created [13], another was published nearly 20 years ago, well before the rise to prominence of neural network applications [14], and the last was not written by a computer science expert [9].

In truth, this program was inevitable given the technology and algorithms we currently possess. The main algorithms employed in AlphaGo, Monte Carlo Tree Search and Artificial Neural Networks, have existed for over a decade [15] [16], and hardware capable of handling such a program has existed for several years.

This program also does not significantly move forward the field of artificial intelligence, given the nature of how the system works. Unlike the work on IBM’s Watson, there does not seem to be any push at Google to re-purpose the AlphaGo program for more meaningful products, and it is unlikely that there ever will be, due to the program’s very narrow design scope. In this sense, AlphaGo is an interesting technical achievement but not a revolutionary one.

Self-Driving Cars

With the rise in prominence of neural networks, they have been applied to many interesting new applications, including the revolutionary area of self-driving cars. Self-driving cars use a combination of 3D image recognition and the power of neural networks to drive a car on real roads. Google has again been leading the way with this technology through Waymo [17].

Waymo combines many neural networks in order to make sense of the real world and perform actions that fit the information it is given. The process by which Waymo understands and works with its input data is broken into several steps: the first works out the shape of an object, and the next recognises what that object might be and what additional information it might be giving, for example recognising not just a road sign but also the meaning of the sign. The ability of the car to recognise an object’s intentions is not limited to static objects either [18]. The system can also recognise more dynamic communication, such as a car using its indicator or even a cyclist using arm signals. By breaking down the steps of the self-driving process, the software engineers have been able to put some levels of abstraction between the real world and their software. This is important for giving the system a greater amount of training data to work with. The program can now run simulations of the environment and work out how it should react in different situations without ever going out into the real world, not only reducing the risk to other drivers during the learning stage but also creating new situations so that it knows what to do before ever facing the circumstances in reality [19].
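The benefit of separating these steps can be illustrated with a small sketch. This is not Waymo’s software: the types `Shape` and `RoadObject`, the lookup tables and the functions `recognise` and `plan` are all hypothetical, and simple dictionaries stand in for the neural networks. The point is only that, once the stages communicate through an abstract description of the scene, later stages can be exercised with simulated input rather than camera data.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Shape:
    """Output of the first stage: an abstract outline plus any motion cue."""
    outline: str
    gesture: Optional[str] = None


@dataclass(frozen=True)
class RoadObject:
    """Output of the second stage: what the object is and what it is telling us."""
    kind: str
    meaning: Optional[str]


# Hypothetical lookup tables standing in for trained recognition networks.
KINDS = {
    "octagon": "stop sign",
    "bicycle-and-rider": "cyclist",
    "car-body": "car",
}
MEANINGS = {
    ("stop sign", None): "must stop",
    ("cyclist", "left arm out"): "turning left",
    ("car", "right indicator"): "turning right",
}


def recognise(shape: Shape) -> RoadObject:
    """Stage 2: turn a shape into a recognised object and its meaning."""
    kind = KINDS.get(shape.outline, "unknown")
    return RoadObject(kind, MEANINGS.get((kind, shape.gesture)))


def plan(obj: RoadObject) -> str:
    """Stage 3: choose an action from the recognised object alone."""
    if obj.meaning == "must stop":
        return "brake"
    if obj.meaning in ("turning left", "turning right"):
        return "yield"
    if obj.kind == "unknown":
        return "proceed with caution"
    return "continue"


if __name__ == "__main__":
    # Simulated scenes: no camera is involved, only abstract shapes.
    for scene in (Shape("octagon"), Shape("bicycle-and-rider", "left arm out")):
        print(scene, "->", plan(recognise(scene)))
```

Because `plan` only ever sees a `RoadObject`, new situations can be generated by constructing `Shape` values directly, which mirrors the simulation argument made above.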

Many have argued that this technology has the potential to revolutionise our entire society by helping those who cannot drive themselves, but also, more worryingly, by putting the jobs of those in the very large transport sector at risk [20]. With major companies working on self-driving cars and other driving technologies, it is no surprise that many people are worried about the future consequences of such products [21].

I believe that, to an extent, the disruptive power of self-driving cars has been overstated too, and many of the arguments put forward for how autonomous vehicles are going to destroy jobs in the transport industry are missing some potential issues with the technology and society itself which could slow the adoption of such vehicles.

In order to take anyone’s job, it is a necessity for these vehicles to be fully autonomous without ever requiring a driver to take control of the wheel. After all, no one would consider flying on a plane without a pilot, even though many modern planes can fly on auto-pilot from take-off to landing, because should any complication arise, a pilot must be there to intervene. Unfortunately, fully autonomous vehicles have to go through a much more stringent learning process than their auto-pilot counterparts. Currently, only a handful of American states have legalised the use of self-driving cars for testing purposes [22], and as such most of the training data that these cars are using comes from similar roads. This poses a serious problem as these cars expand into areas where they have not previously been permitted to drive. Many areas of a road network have rather unique characteristics, meaning that getting a significant amount of data on handling such areas may be quite difficult.

Figure 2: Changing road markings on Welford Road, Leicester (Courtesy of Google Maps). Panels: Marked Road, Unmarked Road.

Figure 2 shows that a clearly marked road can change design quite significantly in a relatively short amount of time into a highly confusing road with no clear marking between each lane. Although a self-driving car would not have many issues with getting used to a road such as this after a little while driving in the city of Leicester, it does not answer the question of how such a car could handle the less traversed and likely more unique areas of a road network. In reality, such problems for fully autonomous cars could be mitigated by ensuring that the vehicle does not enter a part of the road network that it does not have much experience on. This will still allow the car to traverse the most important routes for the majority of people.

Another potential issue with this kind of technology is that it needs to cope with the actions of other drivers. When it comes to driving, humans have many failings. They often make mistakes, but they also sometimes deliberately act unreasonably. They often break minor traffic rules to get an advantage over other cars [23]. I believe they would act even worse when they know a car is programmed to behave well. Given that the behaviour of other drivers may differ depending on whether they are around human or computer drivers, the self-driving car might start getting training data that is skewed by its own presence. It is difficult to say how this might affect the quality of the car’s driving, if at all, but it is something that should be taken note of nonetheless.

Finally, probably one of the strongest arguments for why self-driving cars will not take as many jobs as some have feared is that a driver’s job is rarely, if ever, solely about driving. Although the primary job of a lorry driver is to drive the lorry, they are also responsible for ensuring that the lorry safely makes its way through border controls and that the goods inside have not been stolen. When they arrive at the destination they are often responsible for handing off the goods from one party to another [24]. Similarly in the taxi industry, although the primary purpose of the driver is to drive the cab, it is also their responsibility to ensure that the passengers have paid, to keep their cab clean and to ensure that the passengers behave [25]. It is unlikely that a paying passenger is going to be willing to enter a cab on a busy Friday night if the duties of cleaning have not been adequately taken care of.

Apple’s Siri and other Personal Assistants

In 2011 Apple released the first version of their personal assistant program Siri. Since then, there has been a large rise in the number of personal assistant programs from all the major tech companies including Google, Samsung, Facebook and Microsoft. The major selling point of these products is that users can control aspects of their system using natural language, supposedly making it easier to perform tasks.

The software used in these personal assistants does not differ to a great extent from the work of ELIZA over 50 years before. The major differences of modern systems are that they can now be spoken to rather than typed to, and that they can process and perform actions based on the information the user has given.

A great deal has been written by tech journalists about the power of these personal assistants. A simple search on Google’s news section yields over two million results on the subject. This represents one of the major problems with understanding the extent to which this technology has changed modern life. The novel application, wealth of features and witty responses that are programmed into systems like Siri make them easy click-bait for modern online journalists [26], but in the real world Siri and its contemporaries from other companies have done very little to change how people use their phones. As Carolina Milanesi found in a study she ran for Creative Strategies, 70% of active iPhone users had used Siri only sometimes or rarely [27]. Milanesi states that one of the main barriers to people using this technology is that people feel uncomfortable talking to a machine, especially when other people can hear them.

However, I do not believe that cultural issues are the only aspect of personal assistants hindering their mainstream adoption. Issues with user experience design, as well as the still frustrating lack of understanding in the natural language processing, will largely stop this product from being adopted unless there are significant improvements.

“Show, don’t tell” has been one of the most important principles of user experience design since the 1980s, and it has been largely overlooked by the developers of today’s personal assistants. In the case of personal assistants, users are often neither shown nor told how to use the system, giving them a highly confusing experience.

> Figure 3: Empty Siri Homescreen

As can be seen in Figure 3, upon opening Siri the user is greeted with a totally empty screen with absolutely no indication of what capabilities the application holds. The user needs to ask for help or look up the system’s capabilities in order to even start using it.

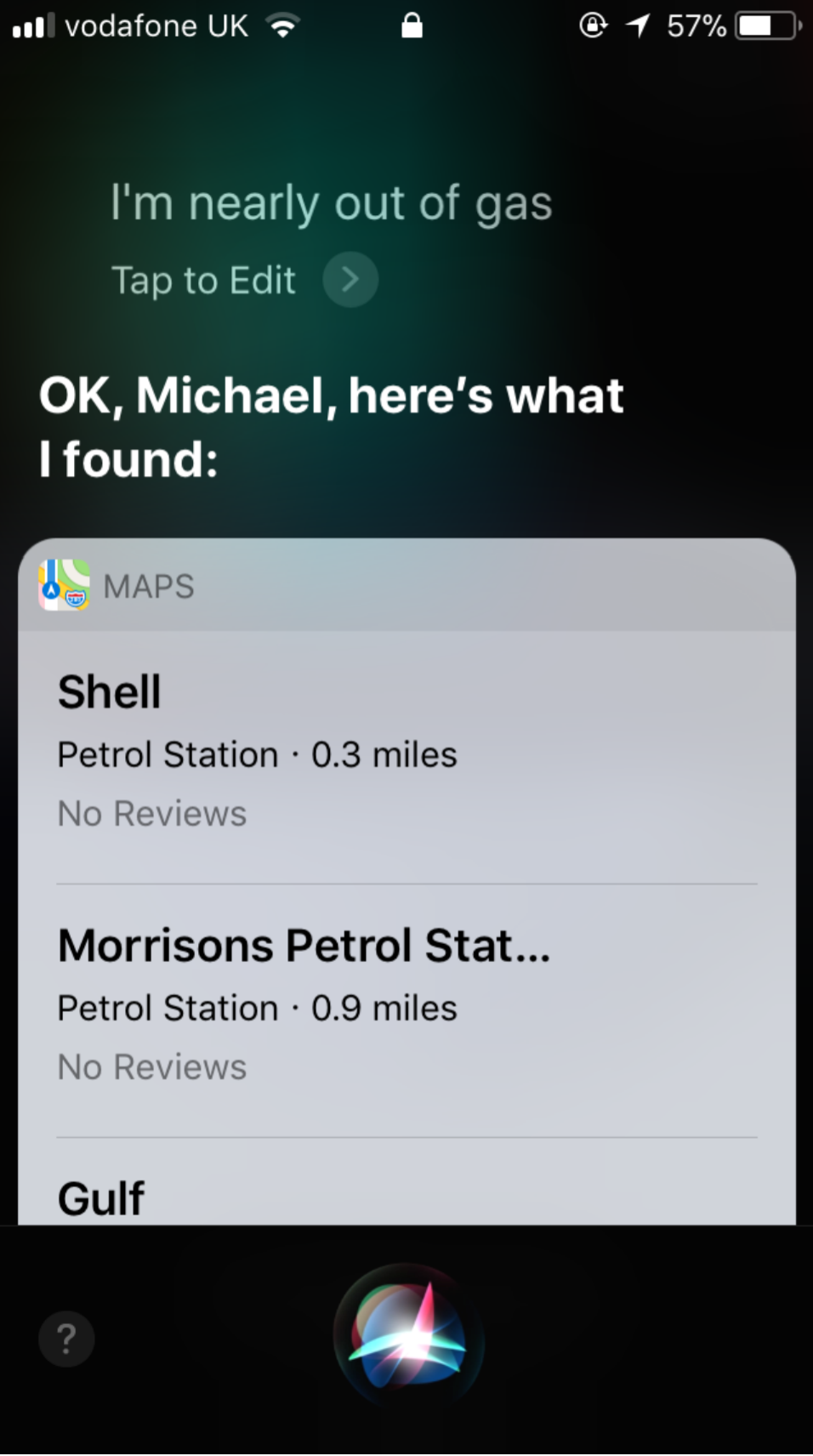

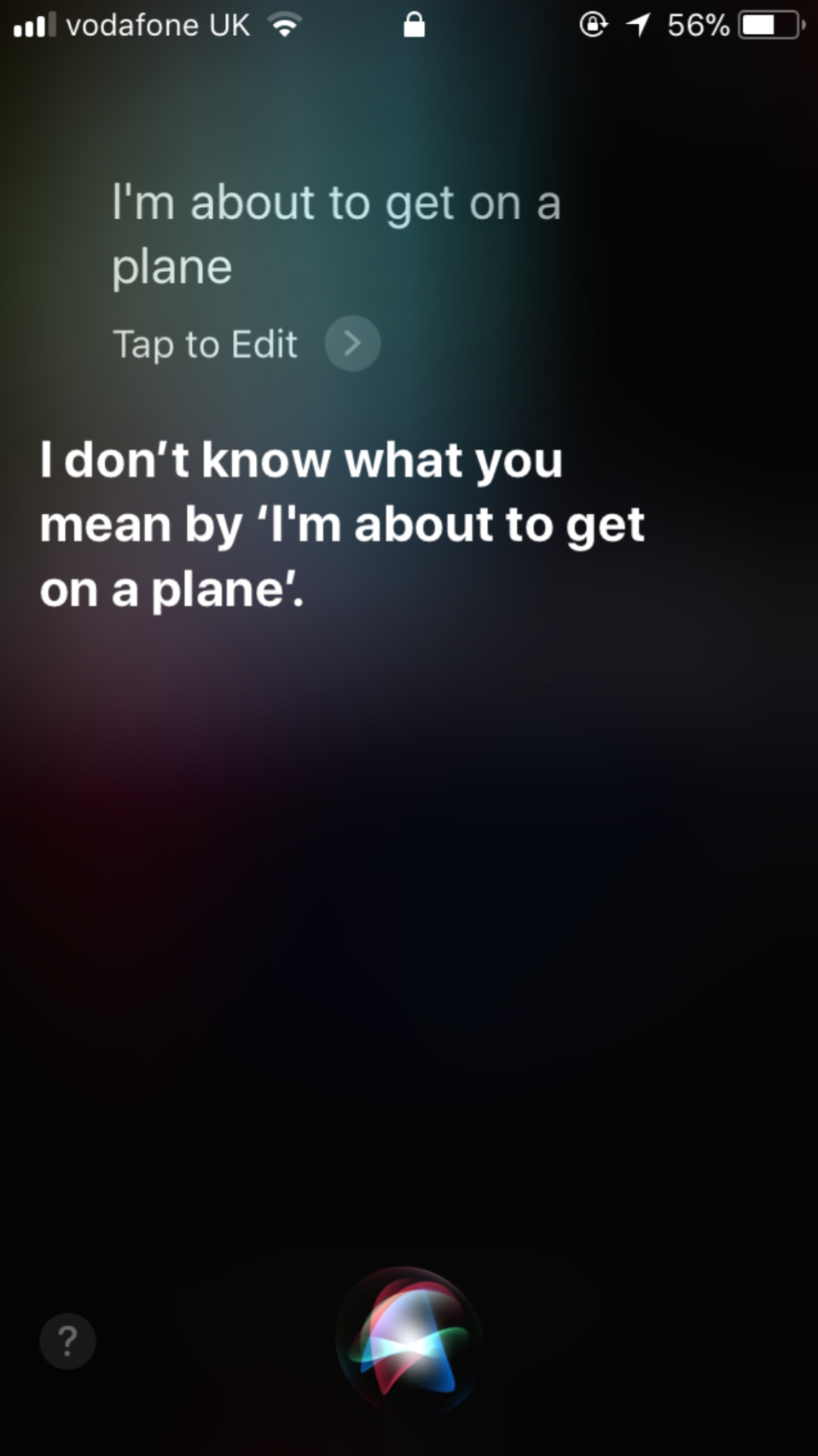

Using natural language only increases the potential for the user to make mistakes. Apple’s online document on the things Siri can do for you [28] shows that you can ask Siri indirect questions such as “I’m running low on gas” (meaning “Where is the nearest gas station?”) and it will give you an appropriate response. But in showing this capability it gives the user the false impression that Siri actually understands what an indirect question is.

Figure 5: Apple’s implementation has an inconsistent user experience. Panels: Finds Correct Response, No Understanding.

A user could expect that, by telling Siri they were about to get on a plane, the system would understand that flight mode should be enabled, given that the program understands indirection in other areas. This common problem of only becoming useful once asked in a specific way is, I believe, one of the greatest barriers to personal assistants’ mainstream adoption, despite all the work that has been done to improve them in the past five years.
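The inconsistency described above is what one would expect from a system that matches utterances against a hand-written list of phrasings. The sketch below is purely illustrative and is not how Siri is implemented: the pattern table `INTENTS`, the intent names and the function `match_intent` are hypothetical, chosen only to show why one indirect request succeeds while another, equally natural one fails.

```python
import re
from typing import Optional

# Hypothetical pattern table: each entry pairs a phrasing with an intent name.
INTENTS = [
    (re.compile(r"\b(low on|out of) (gas|petrol|fuel)\b"), "find_fuel_station"),
    (re.compile(r"\b(turn on|enable) (flight|airplane) mode\b"), "enable_flight_mode"),
]


def match_intent(utterance: str) -> Optional[str]:
    """Return the first intent whose pattern appears in the utterance, if any."""
    text = utterance.lower()
    for pattern, intent in INTENTS:
        if pattern.search(text):
            return intent
    return None  # no listed phrasing matched, so the request is not understood


if __name__ == "__main__":
    for request in ("I'm running low on gas",
                    "Turn on flight mode",
                    "I'm about to get on a plane"):
        print(repr(request), "->", match_intent(request))
```

The first request happens to contain a listed phrasing and so appears to be understood; the last does not, because nothing in the table connects boarding a plane with flight mode. A matcher of this kind only looks as if it handles indirection.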

Just as people overstated the ability of ELIZA [29], similar expectations are being levelled at current natural language technology, with some arguing that instead of phone applications, companies will create chatbots for selling their products [30]. Unfortunately, the truth is that sometimes there is no shortcut to good user design. In attempting to create a more natural user interface, many personal assistants have only been able to create a system that is less powerful and arguably less user friendly than a Unix terminal.

A Promising Future

research on computer science. AI has worked its way from simpler traditional techniques, such as natural language processing and tree searches, to the more sophisticated area of artificial neural networks. Many of the greatest AI advances are now being made in private industry, where previously they would have been confined to the university, giving consumers fast access to the latest technology. Although with private industry it is often difficult to distinguish between a revolutionary new technology and a well-executed advert, there is no doubt that, given the great speed at which the industry has advanced AI in the past five years, similar or greater advances will be made in the next five.

References

[1] A. M. Turing, “Computing machinery and intelligence,” Mind, vol. LIX, no. 236, pp. 433–460, Oct 1950. [Online].

[2] J. Weizenbaum, “ELIZA: a computer program for the study of natural language communication between man and machine,” Commun. ACM, vol. 9, no. 1, pp. 36–45, Jan. 1966. [Online].

[3] Apple Inc., “Apple Face ID.” [Online].

[4] “Computer AI passes Turing test in ‘world first’,” BBC News, Jun 2014. [Online].

[5] K. Warwick and H. Shah, “Good machine performance in Turing’s imitation game,” IEEE Transactions on Computational Intelligence and AI in Games, vol. 6, no. 3, pp. 289–299, 2014.

[6] “IBM’s Watson supercomputer crowned Jeopardy king,” BBC News, Feb 2011. [Online].

[7] “IBM Watson: How it works,” Oct 2014. [YouTube].

[8] IBM, “IBM Watson.” [Online].

[9] A. Levinovitz, “The mystery of Go, the ancient game that computers still can’t win,” Wired, Dec 2014. [Online].

[10] D. Silver, A. Huang, C. J. Maddison, A. Guez, L. Sifre, G. Van Den Driessche, J. Schrittwieser, I. Antonoglou, V. Panneershelvam, M. Lanctot et al., “Mastering the game of Go with deep neural networks and tree search,” Nature, vol. 529, no. 7587, pp. 484–489, 2016.

[11] “Artificial intelligence: Google’s AlphaGo beats Go master Lee Se-dol,” BBC News, 2016.

[12] IBM, “Deep Blue.” [Online].

[13] M. Müller, “Computer Go,” Artif. Intell., vol. 134, pp. 145–179, 2002.

[14] J. Schaeffer, “The games computers (and people) play,” in Fortieth Anniversary Volume: Advancing into the 21st Century, ser. Advances in Computers, M. V. Zelkowitz, Ed. Elsevier, 2000, vol. 52, pp. 189–266.

[15] B. D. Abramson, “The expected-outcome model of two-player games,” Ph.D. dissertation, USA, 1987, AAI8827528.

[16] S. Dreyfus, “The computational solution of optimal control problems with time lag,” IEEE Transactions on Automatic Control, vol. 18, no. 4, pp. 383–385, 1973.

[17] “Waymo.” [Online]. Available: https://waymo.com/

[18] Waymo, “Navigating city streets,” Dec 2016. [YouTube].

[19] “How simulation turns one flashing yellow light into thousands of hours of experience,” Sept 2017. [Online].

[20] A. Marshall, “The human-robocar war for jobs is finally on,” Wired, Sept 2017. [Online].

[21] O. Solon, “More than 70% of US fears robots taking over our lives, survey finds,” The Guardian, 2017.

[22] “Are self-driving cars legal,” hg.org. [Online].

[23] M. Richtel and C. Dougherty, “Google’s driverless cars run into problem: Cars with drivers,” New York Times, Sept 2015.

[24] “Lorry driver job description,” Totaljobs. [Online].

[25] “Taxi driver job description,” Totaljobs. [Online].

[26] A. Willis, “43 questions to ask Siri if you want a funny response,” Metro UK, Oct 2017. [Online].

[27] C. Milanesi, “Voice assistant anyone? Yes please, but not in public!” Creative Strategies, Jun 2016. [Online].

[28] Apple Inc., “Siri.” [Online].

[29] “Professor Joseph Weizenbaum: Creator of the ‘Eliza’ program, obituary,” Independent UK, Mar 2008. [Online].

[30] A. Plumlier, “There will be a bot for everything,” The Verge, Apr 2016. [Online].